Image Analytics Processes

The Illustrated Newspaper Analytics project uses a set of algorithms to variously experiment with, identify, extract, and analyze the visual contents within its data. Some of these processes have been illuminating failures. Others have suggested more promising workflows and intellectual questions to pursue. Our basic question remains: How can computer vision and image processing techniques be adapted for large-scale interpretation of these historical materials?

Figure Extraction

Our data originally derives from newspapers in the British Library, digitized in the 2000s, and now available as facsimile images of historical newspaper pages along with their marked up text in XML. Illustrated newspaper pages combine sections of image and text, though not always in predictable ways. While some page zoning has been done in the XML, our initial step is to identify and extract distinct image areas from the page facsimiles. This lets us harvest illustrations as well as to compare the relative amounts of text and image on a given page, as in the figure on the right.

Our data originally derives from newspapers in the British Library, digitized in the 2000s, and now available as facsimile images of historical newspaper pages along with their marked up text in XML. Illustrated newspaper pages combine sections of image and text, though not always in predictable ways. While some page zoning has been done in the XML, our initial step is to identify and extract distinct image areas from the page facsimiles. This lets us harvest illustrations as well as to compare the relative amounts of text and image on a given page, as in the figure on the right.

Image Matching

Perhaps the simplest analytical function for a computer is to match identical things. A research team at the Bodleian has developed image-matching techniques to identify the re-use of stock woodcuts in early modern broadsheets and ballads (MacLeish). Yet image matching can be computationally intensive on larger data sets. It can also be undertaken by identifying and comparing features within images, such as using image segmentation or GIST descriptors.

Face Detection

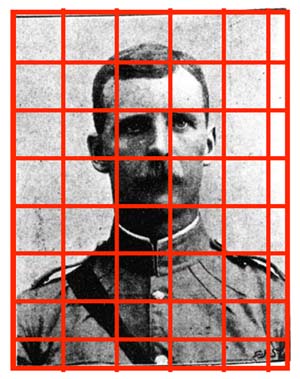

Largely thanks to personal digital photography and social media, face detection and recognition software have become perhaps the most familiar of image analytics techniques. There are several proprietary and open-source algorithms for facial recognition. Our experiments have largely used the Haar Feature-based Cascade Classifiers algorithm in the OpenCV image processing library, testing results from its default settings as well as after supplying training data from nineteenth-century illustrations, including trials of wood-engraved images as well as halftones.

Largely thanks to personal digital photography and social media, face detection and recognition software have become perhaps the most familiar of image analytics techniques. There are several proprietary and open-source algorithms for facial recognition. Our experiments have largely used the Haar Feature-based Cascade Classifiers algorithm in the OpenCV image processing library, testing results from its default settings as well as after supplying training data from nineteenth-century illustrations, including trials of wood-engraved images as well as halftones.

Image Segmentation

Just as with recognizing faces, it is possible to train algorithms to identify objects or consistent patterns in image data for other purposes. Object and face recognition techniques begin with image segmentation, or breaking down an image into its visual components. Applications include classifying things within images, matching similar images, as well as helping self-driving cars learn to navigate a visual environment. Image segmentation methods and applications are a major focus for NC State’s researchers in Electrical and Computer Engineering.

Halftone Detection

Image processing techniques can be used to distinguish between line engravings and halftone images by comparing their backgrounds, separating linear patterns from the characteristic dots of a halftone. We have adapted the work of Liu et al. in algorithmically processing images to identify and sort them based on their production methods, tracing how photo-process methods emerged in the latter decades of the nineteenth century.

Machine Learning

An emerging area in image classification, machine learning techniques are different from rule-based classification in using lots of different computational inputs to evaluate and agree on a value. We have experimented with the open source software Caffe out of UC Berkeley, though line-engraved images present special difficulties if the algorithms are largely trained on photographs.

GIST Descriptors

In plainest language, the GIST of an image is exactly that: its basic shape. More technically, GIST measures the overall vector of an image. We can compute GIST features for all the images in the collection and then sort in a couple of ways. First, by doing a kind of visual topic modeling, where everything gets into a given number of categories by similiarity; second, by measuring the GIST of one image and computing degrees of similarity or difference from that image.